AI for Earthquake Response

Challenge closed

Rules

For your submitted solution to the challenge to be eligible, the following rules need to be adhered to.

Phase 2 rules and procedure

Stress Test - Phase 2 Rules

Phase 2 of the AI Model Challenge for Disaster Response is designed to simulate a real International Charter activation, where teams must respond quickly and accurately to a new earthquake event. Teams will have 10 days to take images and building polygon files from new areas not seen in phase 1 and run their models to predict the damaged buildings. Please read the following rules carefully.

Dataset

- Phase 2 uses an entirely new dataset which is hosted separately on EOTDL here: https://www.eotdl.com/datasets/charter-eo4ai-etq-challenge-testing

- Please note that only data from this new dataset may be used for your Phase 2 submissions!

- If you have access to the Phase 1 dataset, your access to the Phase 2 dataset will be transferred automatically. If you have trouble accessing it, please send us a message.

Timeline

- Start Date: 5 September 2025, 17:00 CEST

- End Date: 15 September 2025, 17:00 CEST

- Duration: 10 days

Scoring Weight

- Phase 2 contributes 60% of the final global score.

- Phase 1 results contribute the remaining 40%.

- Final rankings will be determined after combining both phases.

Submissions

- Submissions will work in the same manner as in Phase 1: teams must submit results as a single .zip file containing predictions for the Phase 2 test sites.

- Predictions must:

  - Include the city name in the file name

  - Include the same number of buildings as in the provided polygon files

  - Fill in the damage field for each building (damaged or undamaged)
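The three requirements above can be checked locally before uploading. The following is a minimal sketch, not an official validator: the function name `check_prediction`, the example file name, the use of building ids, and the exact label spellings `damaged` / `undamaged` are assumptions made for illustration.

```python
from pathlib import Path

# Assumed spelling of the two allowed labels; check the provided files.
ALLOWED_LABELS = {"damaged", "undamaged"}


def check_prediction(file_name, city, provided_ids, predictions):
    """Return a list of problems; an empty list means all checks pass.

    file_name    -- name of the prediction file (hypothetical example:
                    "cityA_pred.gpkg")
    city         -- city name that must appear in the file name
    provided_ids -- building ids taken from the provided polygon file
    predictions  -- dict mapping building id -> damage label
    """
    problems = []

    # Rule 1: the city name must be part of the file name.
    if city.lower() not in Path(file_name).name.lower():
        problems.append(f"file name {file_name!r} does not contain {city!r}")

    # Rule 2: same number of buildings as in the provided polygon file.
    if len(predictions) != len(provided_ids):
        problems.append(
            f"expected {len(provided_ids)} buildings, got {len(predictions)}"
        )

    # Rule 3: every building needs a filled-in damage label.
    unlabeled = [b for b in provided_ids if predictions.get(b) not in ALLOWED_LABELS]
    if unlabeled:
        problems.append(f"{len(unlabeled)} building(s) without a valid damage label")

    return problems
```

In practice the ids and labels would be read from the GeoPackage files with a geospatial library; plain Python containers are used here to keep the sketch self-contained.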

Submission Limits

- Each team is allowed a maximum of 1 submission per day during Phase 2.

- Only the best score among a team’s submissions will count towards the final evaluation.

Leaderboard Visibility

- In Phase 2, the public leaderboard will only show a subset of the submission results. This prevents teams from overfitting their models to the test data.

- Teams will not know their exact positioning until the end of the challenge. Teams will be limited to 1 submission per day over the 10-day testing period. The challenge will close on 15 September.

- Phase 2 scores will be computed based on an average of the two sites.

- Teams will only see their final combined score (Phase 1 + Phase 2) on the official leaderboard released after 15 September.

- This ensures fairness and prevents “trial-and-error” optimization.

Key Points to Remember

- You have 10 days to deliver predictions for completely new, unseen earthquake areas.

- This phase is meant to test your model’s generalization ability under real-world conditions.

- Plan your submissions carefully – with only 1 attempt per day, quality matters more than quantity.

Timing and duration

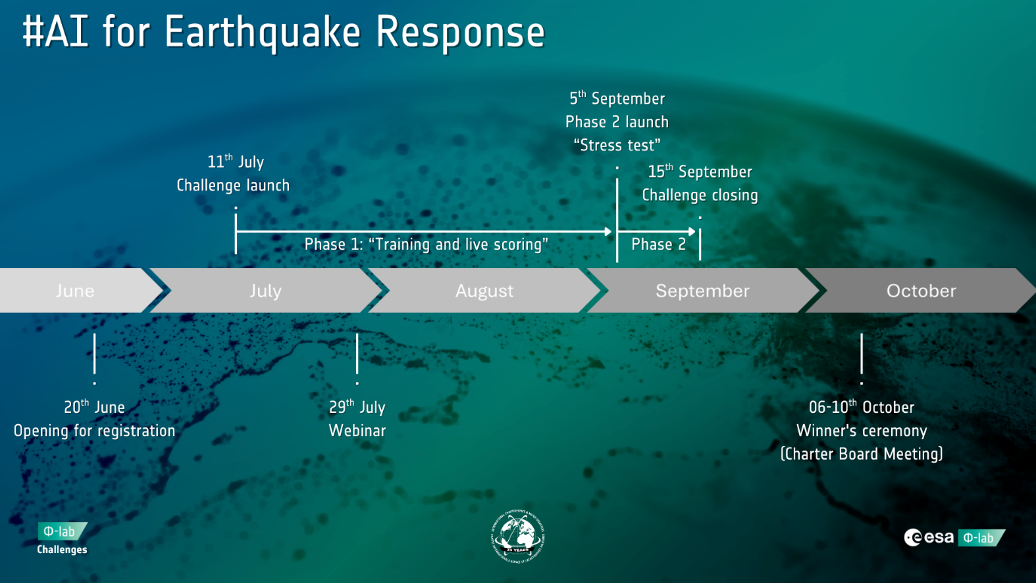

The AI for Earthquake Response Challenge will open for pre-registration at the soft launch on 20 June 2025. Please note that full details and access to the dataset will only be provided after the official hard launch on 11 July 2025.

Challenge timeline and deadlines

- Pre-launch (open for registrations): 20 June 2025

- Full launch (dataset access): 11 July 2025, 16:00 CEST

- Introductory webinar: 29 July 2025, 18:00 CEST (more information coming soon)

- End of phase 1 (submission deadline for phase 1) and launch of phase 2: 05 September 2025, 17:00 CEST

- End of phase 2 and final submission deadline: 15 September 2025, 17:00 CEST

- Announcement of winners: 30 September 2025

- Winners’ ceremony: 07-08 October 2025 at the 54th Charter Meeting in Strasbourg, France

Challenge format & scoring

The challenge will use a two-part evaluation process:

Phase 1 submission format

Participants will submit a single .zip file containing their prediction files (the provided GeoPackage files, edited to include predictions), one for each earthquake site. Each prediction file must assign a damage label to all buildings in the provided polygon file.
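As an illustration of the packaging step, the sketch below bundles per-site prediction files into one archive using Python's standard `zipfile` module. The function name `build_submission`, the archive name `submission.zip`, and the choice to store files at the archive root are assumptions; the rules only require a single .zip file.

```python
import zipfile
from pathlib import Path


def build_submission(prediction_files, archive_path="submission.zip"):
    """Bundle the per-site prediction files into a single .zip archive."""
    with zipfile.ZipFile(archive_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for path in map(Path, prediction_files):
            # Store each file at the archive root (assumed layout).
            archive.write(path, arcname=path.name)
    return Path(archive_path)
```

Calling `build_submission(["cityA.gpkg", "cityB.gpkg"])` with two hypothetical file names would produce `submission.zip` containing both files.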

Only a subset of buildings will be scored, but participants won’t know which ones. This encourages consistent performance across the full dataset.

Phase 1 scoring:

F1 scores will be computed per site to give an idea of model performance at each site. The global F1 score will be computed over the submitted data from all sites pooled together. Both scores will be visible on the public leaderboard, but the global score will determine positioning.
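To make the difference between per-site and global scores concrete, here is a minimal sketch. It assumes a binary F1 with `damaged` as the positive class and reads "added together" as pooling all buildings before computing a single F1; the organisers' exact evaluation code is not published in these rules, and the function names are illustrative.

```python
def f1_score(truth, pred, positive="damaged"):
    """Binary F1 for the positive class (assumed here to be 'damaged')."""
    pairs = list(zip(truth, pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def site_and_global_f1(sites):
    """sites: dict mapping site name -> (truth labels, predicted labels)."""
    per_site = {name: f1_score(t, p) for name, (t, p) in sites.items()}
    # Pool the labels of all sites, then compute one F1 over the pooled data.
    all_truth = [label for t, _ in sites.values() for label in t]
    all_pred = [label for _, p in sites.values() for label in p]
    return per_site, f1_score(all_truth, all_pred)
```

Note that a pooled F1 is generally not the mean of the per-site F1 scores, because sites with more buildings contribute more to the pooled counts.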

Phase 2 submission format

Participants will again submit a .zip file with their prediction files, which are now applied to completely new images and building polygon files. The “damage” field will be left blank in the provided files; participants must infer the complete annotation using their trained model.

Phase 2 scoring:

Evaluation will again use F1 scores with the same methodology as phase 1. Performance in this phase carries greater weight in the final ranking, as it tests model robustness in unfamiliar territory.

Scoring & Evaluation

Your final score will be based on a combination of your performance in both phases:

- 40% - Phase 1 Live Scoring Results (How well your model completed the partially annotated images)

- 60% - Phase 2 Stress Test Results (How accurately your model identified damaged buildings in fully unseen areas)

These weights ensure that participants are rewarded for tuning their models well but ultimately prioritize field-readiness and generalization ability.
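The weighting can be illustrated with a short sketch. It assumes the final score is a plain weighted sum of the two phase scores; the rules state the 40% / 60% weights but not the exact combination formula, and the function name `final_score` is hypothetical.

```python
PHASE1_WEIGHT = 0.4  # Phase 1 live scoring results
PHASE2_WEIGHT = 0.6  # Phase 2 stress test results


def final_score(phase1_f1, phase2_f1):
    """Weighted combination of the two phase scores (assumed linear)."""
    return PHASE1_WEIGHT * phase1_f1 + PHASE2_WEIGHT * phase2_f1
```

For example, a team scoring 0.80 in Phase 1 and 0.70 in Phase 2 would end up at roughly 0.74 under this assumption, so a drop in Phase 2 costs more than the same drop in Phase 1.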

Why This Matters

This weighted scoring ensures that the most robust and generalizable models rise to the top: those that don’t just perform well in a controlled environment but can truly make a difference in the field of rapid disaster response.

Earthquakes strike without warning. In the hours that follow, every minute counts. This challenge reflects the urgency, uncertainty, and pressure of real disaster response. It’s not just about accuracy; it’s about resilience, adaptability, and impact.

Are you ready to put your model to the test?

Participants

People from all over the world can participate in the challenge. You must be of legal age in your jurisdiction to participate.

Please note that some of the input data is subject to access restrictions, and access to the dataset may be prohibited in certain jurisdictions.

Teams

Each participating team must designate a team leader, who will serve as the primary point of contact between the organisers and the team.

Participants are only allowed to join one team throughout the duration of the Challenge. Teams are not permitted to create duplicate or secondary entries.

To ensure fairness and transparency, we kindly ask that participants from the same public organisation or private company form a single team. If multiple teams from the same entity appear to be collaborating, the ESA Φ-lab Challenge organisers reserve the right to require them to merge into one team. This rule is in place to prevent unfair advantages and maintain a level playing field for all.

If you are planning to join the Challenge with colleagues from your workplace or academic institution, please coordinate and register as one unified team per entity. Note: In the case of large institutions (e.g. universities), it may not be feasible for all individuals to coordinate. If teams can demonstrate they are not cooperating, they may be exempt from this rule.

The organising team reserves the right to request the deletion of any duplicate registrations or submissions.

Solution presentation

The winners of the AI for Earthquake Response Challenge will be publicly announced during the 54th Charter Meeting in Strasbourg, taking place 06–10 October 2025. The exact date of the award presentation will be communicated to the winning teams at a later stage. The winning team will be invited to Strasbourg for 7 and 8 October and to a dinner celebrating the 25th anniversary of the Charter. This dinner will be attended by Charter member representatives and other distinguished guests from the earth observation and humanitarian communities. Full teams are welcome to attend the meeting and present their work; however, only two places at the dinner can be reserved for the winning challengers.

To showcase their solutions and ensure transparency, winning teams will be invited to give a 10-minute pitch-style presentation of their work. This presentation should be supported by a concise pitch deck (e.g., a PowerPoint or similar format).